Installation Methods

Methods for installing Kyverno

Kyverno can be installed in two ways: with Helm or with a YAML manifest. When installing in a production environment, Helm is the recommended and most flexible method because it offers convenient configuration options that cover a wide range of customizations. Regardless of the method, Kyverno must always be installed in a dedicated Namespace; it must not be co-located with other applications in existing Namespaces, including system Namespaces such as `kube-system`. The Kyverno Namespace should also not be used to deploy other, unrelated applications and services.

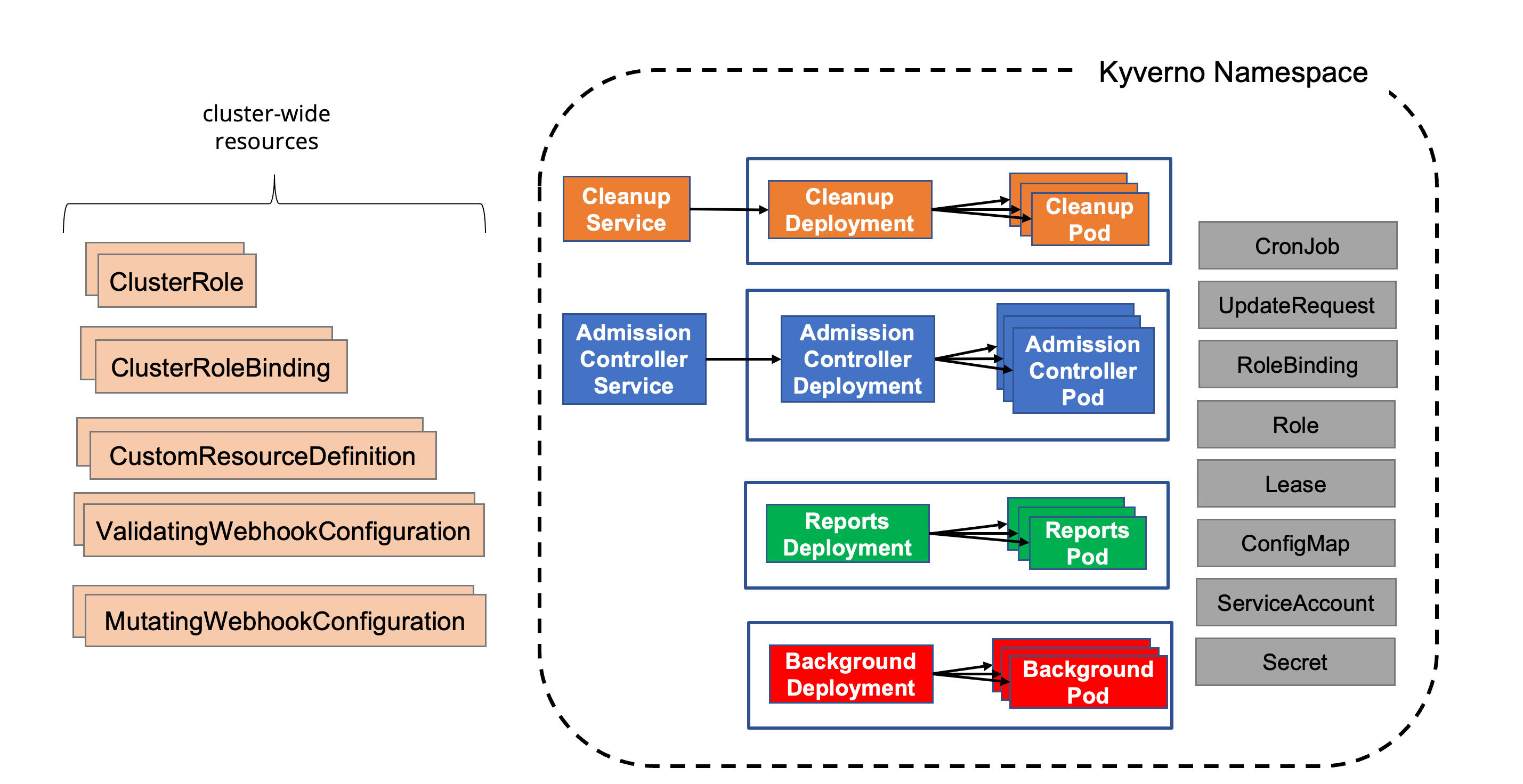

The diagram below shows a typical Kyverno installation featuring all available controllers.

A standard Kyverno installation consists of a number of different components, some of which are optional.

Kyverno follows the same support policy as the Kubernetes project (an N-2 policy), in which the current release and the previous two minor versions are maintained. Although prior versions may work, they are not tested, so no guarantees are made as to their full compatibility. The table below shows the compatibility matrix.

| Kyverno Version | Kubernetes Min | Kubernetes Max |
|---|---|---|
| 1.12.x | 1.26 | 1.29 |
| 1.13.x | 1.28 | 1.31 |
| 1.14.x | 1.29 | 1.32 |
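The matrix can also be checked programmatically, for example in a pre-install script. The following is a minimal sketch, not part of Kyverno: the names `COMPATIBILITY`, `parse_minor`, and `is_tested_combination` are hypothetical, and the data is copied from the table above, so it must be updated by hand when the table changes.

```python
# Compatibility matrix copied from the table above:
# Kyverno minor version -> (minimum, maximum) tested Kubernetes minor version.
COMPATIBILITY = {
    "1.12": ((1, 26), (1, 29)),
    "1.13": ((1, 28), (1, 31)),
    "1.14": ((1, 29), (1, 32)),
}


def parse_minor(version: str) -> tuple[int, int]:
    """Return (major, minor) from strings such as '1.30', 'v1.30.2' or '1.14.x'."""
    parts = version.lstrip("v").split(".")
    return int(parts[0]), int(parts[1])


def is_tested_combination(kyverno_version: str, kubernetes_version: str) -> bool:
    """True if the Kubernetes version lies inside the tested range for this Kyverno release."""
    major, minor = parse_minor(kyverno_version)
    supported = COMPATIBILITY.get(f"{major}.{minor}")
    if supported is None:
        return False  # Kyverno release is not listed in the table
    lowest, highest = supported
    return lowest <= parse_minor(kubernetes_version) <= highest


print(is_tested_combination("1.13.4", "v1.30.2"))  # True
print(is_tested_combination("1.12.x", "1.30"))     # False
```

A `False` result only means the combination is outside the tested range; as noted above, it may still work.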

NOTE: For long-term compatibility support, select a commercially supported Kyverno distribution.

For a production installation, Kyverno should be installed in high availability mode. Regardless of the installation method, it is important to understand the risks associated with any webhook and how it may impact cluster operations and security, especially in production environments.

By default, Kyverno configures its resource webhooks in fail closed mode, although this is configurable. This means that if the API server cannot reach Kyverno when it attempts to send an AdmissionReview request for a resource that matches a policy, the request will fail. For example, suppose a validation policy requires that all Pods run as non-root. A new Pod creation request is submitted to the API server, and the API server cannot reach Kyverno. Because the policy cannot be evaluated, the request to create the Pod will fail. Care must therefore be taken to ensure that Kyverno is always available, or else that the webhooks are configured to exclude certain key Namespaces, specifically Kyverno's own, so that Kyverno can recover and receive those API requests. Whichever option is chosen, there is a tradeoff between security by default and operability.
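The difference between fail closed and fail open can be illustrated with a small model of the API server's decision. This is a simplified sketch rather than Kubernetes or Kyverno code: the `Webhook` class and `admit` function are hypothetical, and Namespace exclusions are deliberately left out. The values `"Fail"` and `"Ignore"` mirror the `failurePolicy` field of a Kubernetes webhook configuration.

```python
from dataclasses import dataclass


@dataclass
class Webhook:
    """Hypothetical, simplified stand-in for one admission webhook entry."""
    failure_policy: str   # "Fail" (fail closed) or "Ignore" (fail open)
    reachable: bool       # can the API server reach the webhook service?
    policy_allows: bool   # verdict the webhook would return if it were reachable


def admit(webhook: Webhook) -> bool:
    """Return True if the API server would admit the request."""
    if webhook.reachable:
        # Normal case: the policy verdict decides.
        return webhook.policy_allows
    # Webhook unreachable: the failure policy decides the outcome.
    return webhook.failure_policy == "Ignore"


# Kyverno is down and a Pod creation request arrives.
print(admit(Webhook("Fail", reachable=False, policy_allows=True)))    # False
print(admit(Webhook("Ignore", reachable=False, policy_allows=True)))  # True
```

With fail closed, even a compliant Pod is rejected while Kyverno is unreachable; with fail open, a non-compliant Pod would be admitted unchecked during the same window.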

The following combination may result in cluster inoperability if the Kyverno Namespace is not excluded:

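1. Webhooks are configured in fail closed mode (the default setting).
2. No Namespace exclusions have been configured for at least the Kyverno Namespace, and possibly other key system Namespaces (for example, `kube-system`). This is not the default as of Helm chart version 2.5.0.
3. All Kyverno Pods become unavailable, for example due to a full cluster outage or improper scaling in of Nodes.

If this combination of events occurs, the only way to recover is to manually delete the ValidatingWebhookConfigurations, thereby allowing new Kyverno Pods to start up. Recovery steps are provided in the troubleshooting section.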

By contrast, these operability concerns can be mitigated by making some security concessions. Specifically, if the Kyverno Namespace and other system Namespaces are excluded during installation, Kyverno should be able to recover by itself, with no manual intervention, should the failure scenario described above occur. This is the default behavior as of Helm chart version 2.5.0. However, configuring these exclusions means that policies will not be able to act on resources destined for those Namespaces, because the API server has been told not to send AdmissionReview requests for them. Providing controls for those Namespaces is therefore left to the cluster administrator, for example by using Kubernetes RBAC to restrict who can act in the excluded Namespaces and what they can do there.

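NOTE: When excluding Namespaces, matching on the immutable `kubernetes.io/metadata.name` label is recommended.

The choices and their implications are therefore:

1. Do not exclude system Namespaces, including Kyverno's (not the default). This gives a more secure-by-default posture but may require manual recovery steps in some outage scenarios.
2. Exclude system Namespaces during installation (the default). This makes cluster recovery easier but may require other methods, such as Kubernetes RBAC, to secure those Namespaces.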

You should choose the best option based upon your risk aversion, needs, and operational practices.
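To make the effect of a Namespace exclusion concrete, the sketch below models a webhook `namespaceSelector` that matches on the `kubernetes.io/metadata.name` label, which Kubernetes sets on every Namespace to the Namespace's name. The selector shape follows the Kubernetes label selector format, but the helper functions are hypothetical, the exclusion list is only an illustration, and only the `In` and `NotIn` operators are handled.

```python
# Immutable label that Kubernetes sets on every Namespace to the Namespace's name.
NAME_LABEL = "kubernetes.io/metadata.name"

# Illustrative exclusion list; which Namespaces to exclude is an assumption of this sketch.
EXCLUDED_NAMESPACES = ["kyverno", "kube-system"]

# Shape of a webhook namespaceSelector that skips the excluded Namespaces.
namespace_selector = {
    "matchExpressions": [
        {"key": NAME_LABEL, "operator": "NotIn", "values": EXCLUDED_NAMESPACES},
    ]
}


def selector_matches(selector: dict, namespace_labels: dict) -> bool:
    """Evaluate In/NotIn match expressions against a Namespace's labels."""
    for expr in selector.get("matchExpressions", []):
        value = namespace_labels.get(expr["key"])
        if expr["operator"] == "In" and value not in expr["values"]:
            return False
        if expr["operator"] == "NotIn" and value in expr["values"]:
            return False
    return True


def webhook_called_for(namespace: str) -> bool:
    """True if the API server would send AdmissionReview requests for this Namespace."""
    return selector_matches(namespace_selector, {NAME_LABEL: namespace})


print(webhook_called_for("default"))      # True
print(webhook_called_for("kyverno"))      # False
print(webhook_called_for("kube-system"))  # False
```

Because requests for `kyverno` and `kube-system` never reach the webhook in this model, Kyverno Pods can restart during an outage, and no policy is evaluated for resources in those Namespaces.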

